Jay Test Article – 1


by Alexander Fleischmann, Öykü Işık, Sarah E. Toms Published January 23, 2025 in Artificial Intelligence • 8 min read

According to the World Economic Forum, Generative AI (GenAI) will achieve near-universal integration in business in the coming 18 months, transforming operations and unlocking massive productivity gains that are set to add trillions of dollars to the global economy. Simply put, GenAI is a game-changer. But even as organizations around the world look to harness the transformational potential of this technology, they must also act now to address attendant risks; among these, the risk that bias in GenAI training data, development processes, and outputs can perpetuate and exacerbate injustice, inequity, and unfairness in our society.

We have co-authored a white paper in collaboration with Microsoft and the Ringier AG EqualVoice Initiative that constitutes a clarion call to businesses and leaders around the world to take the risk of bias in GenAI seriously and take affirmative action to address it within their organizations.

Launched at the World Women Davos Agenda 2025, Mind the Gap: Why bias in GenAI is a diversity, equity, and inclusion challenge that must be addressed now sets out to:

Bias finds its way into GenAI mostly via real-world training data that mirrors existing societal inequities and discrimination, and via skewed data sets that over-represent privileged segments of society. Unchecked, this bias can become ingrained in development processes in which one demographic group, white men, remains overrepresented. A lack of diversity in design, building, and testing processes can undermine an organization’s ability to spot bias. Once biased outputs are deployed and make their way into the real world, they have the potential to perpetuate stereotypes, helping to widen existing gaps in fairness, representation, access, and opportunity. As GenAI continues to evolve and integration intensifies, these outputs can re-enter training data, potentially creating a biased feedback loop in which discrimination, exclusion, and unfairness are amplified.

For society, this can be problematic. In 2024, UNESCO published research showing that GenAI systems associate women with terms like “home,” “family,” and “children” four times more frequently than they do men. Meanwhile, names that sound male are linked to terms like “career” and “executive.” In 2024, EqualVoice tested GenAI image generators for bias and found that prompts correlated with stereotypes: “CEO giving a speech” produced images of men 100% of the time, and 90% of these were white men. The prompt “businesswoman” yielded images of women who were, in 100% of cases, young and conventionally attractive. Again, 90% of these were white.

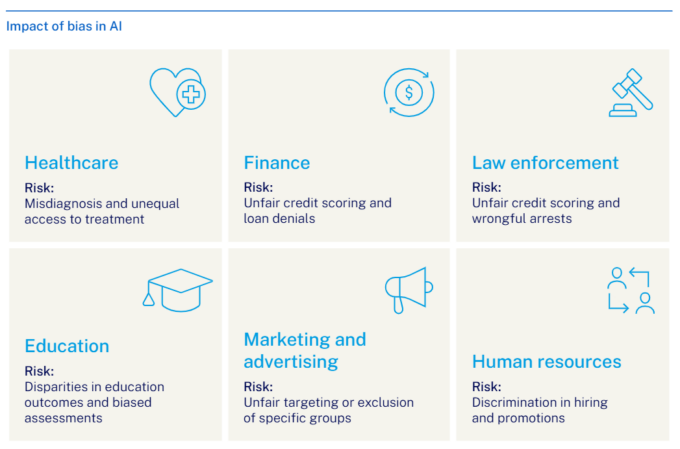

While this is troubling at a societal level, at the level of individual organizations, bias in GenAI can also constitute a serious threat.

Businesses using the technology to help map market segments, design and produce products, and engage with customer bases are at risk of suboptimal risk management and decision-making unless they introduce effective approaches, measures, checks, and balances to mitigate bias. Innovation and growth can be undermined, and opportunities might be missed when organizations fail to integrate diverse user needs, priorities, and perspectives. Brand reputation and loyalty suffer when organizations fail to uphold ethical standards and societal values. And in a world where integration is accelerating, it is only natural that laws and guidelines around the use of GenAI will intensify. Organizations found wanting in the regulatory context are likely to face increasingly stringent financial and operational consequences.

Our white paper shares the findings of a survey that we conducted with our co-authors at the end of 2024. The organizations and leaders that we consulted shared some critical insights.

As organizations expedite the wholesale integration of GenAI into everyday systems and processes, we believe this situation to be untenable. And we believe it should be a major concern to decision-makers.

“Microsoft is committed to responsible AI and ours is an approach that ensures AI systems are developed and deployed in a way that is ethical and beneficial for society. What is critical is that responsible AI is not seen as a filter to be applied at the end, but instead a foundational and integral part of the development and deployment process.

“Our employees are trained to assess and mitigate risks, and work with multi-disciplinary teams to review and test using red-teaming and risk simulation. This is an approach that we share in the white paper and with the public in general, to help inform other organizations in their own responsible AI efforts.”

Ann Jameson, Chief Operating Officer, Microsoft Switzerland

Moving the needle on bias in GenAI won’t be easy. GenAI is a hyper-complex technology and prone to black-box internal mechanisms that make its decision-making hard to scrutinize. Its ability to generate seemingly endless creative results and outputs means that bias can surface in different ways, some obvious and some less so. Meanwhile, the technology itself is scaling so fast it can be hard to keep pace. Even so, we believe it is imperative that organizations act on this issue – and that they do so without delay.

We believe that the right way to remediate the risk of bias in GenAI is by taking a pan-organizational approach: an approach predicated on organizational values and a set of clear and actionable responsible AI principles or tenets. Key to this approach is de-siloing the organization to fully leverage its diverse knowledge, skills, perspectives, and capabilities, and where appropriate, looking to the broader ecosystem for external input and outlook. The right governance mechanisms must be enacted and communicated across the organization, and we believe that diversity, equity, and inclusion functions along with inclusive leadership, psychological safety, and ongoing training and education have critical and integrative roles to play in building awareness and a sense of shared ownership in addressing bias in GenAI.

To this end, our white paper shares detailed insights and recommendations that focus on three key spokes:

“We are at a critical crossroads with GenAI. As we increasingly adopt this new technology, we have to consider whether we simply want it to help us do more – or to help more of us do more. Do we want it to help us build a world in which more of us can compete and contribute on a more equitable footing? If the answer is yes, then leaders and decision-makers must take decisive action on bias in GenAI and do so without delay.”

Annabella Bassler, Chief Financial Officer, Ringier AG, and Initiator of the EqualVoice Initiative

GenAI has the potential to revolutionize the ways that we work, produce, communicate, and collaborate. But will it help make our organizations, our stakeholder communities, and our society fairer and more equitable, or not? Will GenAI help us safeguard the values of diversity, equity, and inclusion going forward or will it reflect and, worse, amplify the already egregious gaps in access, representation, and opportunity that undermine progress and innovation?

We are at an inflection point in shaping the kind of GenAI-powered future we want to see. And the time to step up and shape that future is now.

Please find out more about the problem of bias in GenAI and what your organization can do to address it. Visit this page to download your copy of Mind the Gap: Why bias in GenAI is a diversity, equity, and inclusion challenge that must be addressed now.

Discover the work of IMD in digital transformation and AI.

Equity, Inclusion and Diversity Research Affiliate

Alexander received his PhD in organization studies from WU Vienna University of Economics and Business researching diversity in alternative organizations. His research focuses on inclusion and how it is measured, inclusive language and images, ableism and LGBTQ+ at work as well as possibilities to organize solidarity. His work has appeared in, amongst others, Organization; Work, Employment and Society; Journal of Management and Organization and Gender in Management: An International Journal.

Professor of Digital Strategy and Cybersecurity at IMD

Öykü Işık is Professor of Digital Strategy and Cybersecurity at IMD, where she leads the Cybersecurity Risk and Strategy program and co-directs the Generative AI for Business Sprint. She is an expert on digital resilience and the ways in which disruptive technologies challenge our society and organizations. Named on the Thinkers50 Radar 2022 list of up-and-coming global thought leaders, she helps businesses to tackle cybersecurity, data privacy, and digital ethics challenges, and enables CEOs and other executives to understand these issues.

Chief Innovation Officer

Sarah Toms is Chief Innovation Officer at IMD. She leads information technology, learning innovation, Strategic Talent Solutions, and the AI Strategy. A demonstrated thought leader in education innovation, Sarah is passionate about amplifying IMD’s mission to drive positive impact for individuals, organizations, and society.

She previously co-founded Wharton Interactive, an initiative at the Wharton School that has scaled globally. Sarah has been on the Executive Committee of Reimagine Education for almost a decade, and was one of ten people globally to be selected as an AWS Education Champion. Her other great passion is supporting organizations that work to attract and promote women and girls into STEM.

She has spent nearly three decades working at the bleeding edge of technology, and was an entrepreneur for over a decade, founding companies that built global CRM, product development, productivity management, and financial systems. Sarah is also coauthor of The Customer Centricity Playbook, the Digital Book Awards 2019 Best Business Book.
