Map and create an inventory of AI agents.

A structured, step-by-step approach is essential to deploying AI agents safely and effectively. The first step is to map and inventory all AI agents and their associated data flows. CDOs and CIOs should document each agent’s inputs and outputs, the systems it interacts with, and the information it processes. This mapping reveals new data streams, overlaps, and silos. Organizations can then close gaps and remove unnecessary duplication, ensure that no agent operates under the radar, and keep access to sensitive data tightly controlled.
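
The inventory described above can be kept as a simple data structure and queried for the overlaps, sensitive access, and "under the radar" agents it is meant to expose. This is a minimal sketch under stated assumptions: `AgentRecord`, `SENSITIVE_SYSTEMS`, the helper functions, and every agent and system name are hypothetical illustrations, not part of any specific product or standard.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical set of systems that hold sensitive data.
SENSITIVE_SYSTEMS = frozenset({"hr_db", "payments"})


@dataclass(frozen=True)
class AgentRecord:
    """One entry in a hypothetical agent inventory: owner and systems touched."""
    name: str
    owner: str
    inputs: frozenset   # systems the agent reads from
    outputs: frozenset  # systems the agent writes to


def find_overlaps(inventory):
    """Return systems written to by more than one agent (possible duplication)."""
    writers = defaultdict(set)
    for agent in inventory:
        for system in agent.outputs:
            writers[system].add(agent.name)
    return {system: names for system, names in writers.items() if len(names) > 1}


def sensitive_access(inventory):
    """Map each agent to the sensitive systems it reads from or writes to."""
    access = {}
    for agent in inventory:
        touched = (agent.inputs | agent.outputs) & SENSITIVE_SYSTEMS
        if touched:
            access[agent.name] = set(touched)
    return access


def unregistered(inventory, observed_agent_names):
    """Agents seen in system logs but missing from the inventory."""
    known = {agent.name for agent in inventory}
    return set(observed_agent_names) - known


# Illustrative inventory; all names are made up.
inventory = [
    AgentRecord("invoice_bot", "finance", frozenset({"erp"}), frozenset({"payments"})),
    AgentRecord("support_bot", "cx", frozenset({"crm"}), frozenset({"crm", "ticketing"})),
    AgentRecord("triage_bot", "cx", frozenset({"ticketing"}), frozenset({"ticketing"})),
]
print(find_overlaps(inventory))
print(sensitive_access(inventory))
print(unregistered(inventory, ["invoice_bot", "shadow_bot"]))
```

Keeping inputs and outputs as sets makes overlap and sensitivity checks simple set operations; in practice the "observed" agent names would come from access logs rather than a hard-coded list.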

Monitor agents and their data quality.

Setting clear standards for agent-generated data, such as accuracy, completeness, and timeliness, can prevent agents from injecting faulty information into enterprise systems. This may involve adopting data dictionaries, integration pipelines, and validation checks that unify human- and agent-created data in a single source of truth. Continuous monitoring and auditing are equally critical: AI agents must be tracked like high-privilege users, with automated logs, anomaly alerts, and regular performance reviews. These measures help detect security threats, compliance breaches, and quality issues before they escalate.
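
As a rough illustration of those checks, the sketch below validates an agent-written record against a data-dictionary entry for completeness, a crude accuracy rule, and timeliness, writes every decision to an audit log, and raises a simple volume-based anomaly alert. It is a sketch under stated assumptions: the schema, the 24-hour freshness window, the "exactly one @" email rule, the 3x baseline factor, and all function names are hypothetical, and timestamps are assumed to be timezone-aware.

```python
import logging
from datetime import datetime, timedelta, timezone

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("agent_audit")

# Hypothetical data-dictionary entry: required fields and their expected types.
CUSTOMER_SCHEMA = {"customer_id": str, "email": str, "updated_at": datetime}
MAX_AGE = timedelta(hours=24)  # assumed timeliness threshold


def validate_record(record, agent_id, now=None):
    """Check an agent-written record; return a list of problems (empty = accepted)."""
    now = now or datetime.now(timezone.utc)  # timestamps assumed timezone-aware
    problems = []
    # Completeness: every data-dictionary field must be present, non-empty, and typed.
    for name, expected_type in CUSTOMER_SCHEMA.items():
        value = record.get(name)
        if value is None or value == "":
            problems.append(f"missing {name}")
        elif not isinstance(value, expected_type):
            problems.append(f"{name} has type {type(value).__name__}")
    # Accuracy (a crude stand-in rule): an email must contain exactly one "@".
    email = record.get("email")
    if isinstance(email, str) and email and email.count("@") != 1:
        problems.append("malformed email")
    # Timeliness: reject records older than MAX_AGE.
    updated = record.get("updated_at")
    if isinstance(updated, datetime) and now - updated > MAX_AGE:
        problems.append("stale record")
    # Audit trail: log every decision together with the agent that produced it.
    if problems:
        audit_log.warning("agent=%s rejected record: %s", agent_id, "; ".join(problems))
    else:
        audit_log.info("agent=%s accepted record %s", agent_id, record["customer_id"])
    return problems


def flag_anomalies(action_counts, baseline, factor=3.0):
    """Flag agents acting more than `factor` times their usual volume.

    Agents with no recorded baseline are flagged as soon as they act at all.
    """
    alerts = [agent for agent, count in action_counts.items()
              if count > factor * baseline.get(agent, 0)]
    for agent in alerts:
        audit_log.warning("agent=%s anomaly: %d actions vs baseline %s",
                          agent, action_counts[agent], baseline.get(agent, 0))
    return alerts
```

Treating an agent with no baseline as anomalous mirrors the "high-privilege user" stance: unknown activity is surfaced for review rather than silently accepted.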

Model a structured AI governance framework.

Governance policies should define how agents are approved, deployed, and managed, building risk assessments, ethical guidelines, and security considerations into every stage. Like the technology itself, governance protocols are still emerging and evolving.

The Model Context Protocol (MCP), introduced by Anthropic, is an open standard for connecting AI systems to data sources. By giving organizations and individuals a common way to integrate their AI systems with the data they need, it streamlines access and improves operational efficiency.

Some companies establish a dedicated Center of Excellence (CoE) that brings together cross-functional teams, including legal, security, and IT, to oversee agent-related processes. This centralized approach keeps AI expansion under control, reduces reliance on reactive fixes, and provides a shared framework for addressing complexities such as regulatory compliance and bias mitigation.
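
One way to make "approved, deployed, and managed" enforceable is to model each agent's lifecycle as a small state machine whose approval gate requires every review to be signed off first. This is a generic sketch, not an implementation of MCP or of any particular CoE process: the `Stage` values, the review names in `REQUIRED_REVIEWS`, and the `AgentProposal` and `GovernanceError` classes are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    DEPLOYED = "deployed"
    RETIRED = "retired"


# Hypothetical sign-offs a governance board or CoE might require before approval.
REQUIRED_REVIEWS = frozenset({"risk_assessment", "ethics_review", "security_review", "legal_review"})

# Allowed lifecycle transitions; anything else is rejected.
ALLOWED = {
    Stage.PROPOSED: {Stage.APPROVED, Stage.RETIRED},
    Stage.APPROVED: {Stage.DEPLOYED, Stage.RETIRED},
    Stage.DEPLOYED: {Stage.RETIRED},
    Stage.RETIRED: set(),
}


class GovernanceError(Exception):
    """Raised when an agent tries to skip a governance gate."""


@dataclass
class AgentProposal:
    name: str
    stage: Stage = Stage.PROPOSED
    reviews_done: set = field(default_factory=set)

    def sign_off(self, review):
        """Record that one required review has been completed."""
        if review not in REQUIRED_REVIEWS:
            raise GovernanceError(f"unknown review: {review}")
        self.reviews_done.add(review)

    def advance(self, target):
        """Move to `target` only if the transition is allowed and all gates are met."""
        if target not in ALLOWED[self.stage]:
            raise GovernanceError(f"{self.stage.value} -> {target.value} is not allowed")
        if target is Stage.APPROVED:
            missing = REQUIRED_REVIEWS - self.reviews_done
            if missing:
                raise GovernanceError(f"missing reviews: {sorted(missing)}")
        self.stage = target


# Usage: an agent can only reach deployment after every review is signed off.
proposal = AgentProposal("invoice_bot")
for review in sorted(REQUIRED_REVIEWS):
    proposal.sign_off(review)
proposal.advance(Stage.APPROVED)
proposal.advance(Stage.DEPLOYED)
print(proposal.stage)
```

Encoding the allowed transitions explicitly means an agent cannot jump from proposal straight to deployment, which is the "built into every stage" property the governance policy is meant to guarantee.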

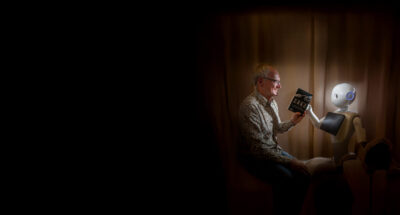

Manage via human oversight: Define an ‘Agent Controller’ role.

Organizations may introduce roles such as an “Agent Controller” or “AI Wrangler” (reporting to the CDO or CIO) to supervise day-to-day agent operations, handle exceptions, and continuously refine agent performance. Clearly assigning accountability avoids confusion over who monitors the AI’s outputs, retrains the model, or responds to malfunctions. As agents proliferate, some organizations may eventually have more AI agents than employees, which could require an ‘Agent HR’ department led by a Chief Agent Officer.